By Kevin Moore on 28 Feb 2017

So here we are, two months into 2017, and it looks like Google are warming up for an interesting year full of surprises, with SEO treats in store for website owners and webmasters alike. With the first official Google update of 2017, on the 10th of January, we were introduced to the ‘Intrusive Interstitial Penalty’. This targets aggressive interstitials and pop-ups that damage the mobile user experience, which makes sense considering 2017 is set to be 'the year of mobile' (how many times have we heard that before?). The Irish weather outside is cold, damp, dismal and devoid of a consistent climate, but if you were to check in on some of our favourite algorithm update tools, you'd see that in the online world things are steadily heating up!

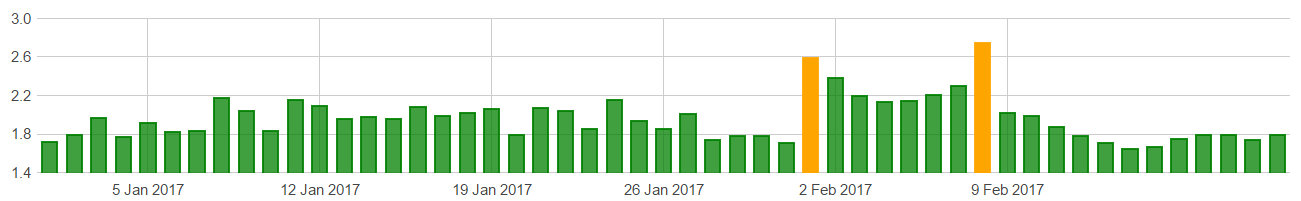

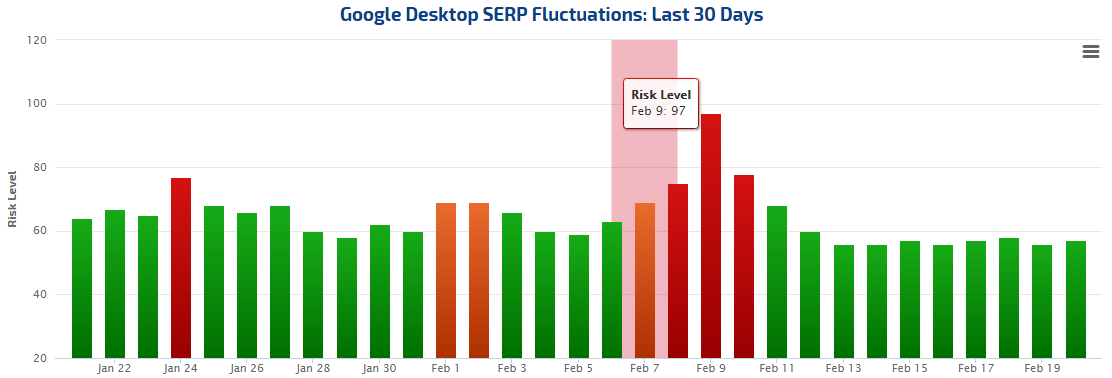

For example, check out Algoroo, from our good friends down under at Dejan SEO, and Rank Ranger, both showing some interesting movement in the Search Engine Results Pages (SERPs) in February.

Source: Algoroo

Source: Algoroo

Source: Rank Ranger

Algoroo is showing high temperatures on both the 2nd and the 9th of February, while Rank Ranger is showing a Risk Level of 97 on the 9th of February. These high temperatures indicate high volatility in Google's search results, where keyword rankings do the ‘Google Dance’. The more movement recorded, the higher the probability that Google have released an algorithm update. It's believed that the movement on the 3rd of February was Penguin-related, focusing on spammy link practices. The movement on the 9th of February, which appears to have been more significant, was initially attributed by many in the SEO world to a Panda (content-related) update.
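Neither Algoroo nor Rank Ranger publishes the exact formula behind its temperature or Risk Level, so the Python sketch below is only a hypothetical illustration of the general idea that more ranking movement means a hotter reading. It assumes a made-up set of tracked keywords and scores volatility as the average absolute change in position between two days; the `volatility_score` function is our own invention, not either tool's method.

```python
from statistics import mean


def volatility_score(yesterday: dict[str, int], today: dict[str, int]) -> float:
    """Hypothetical metric: mean absolute change in ranking position.

    Only keywords tracked on both days are compared. This is NOT the
    formula used by Algoroo or Rank Ranger, just an illustration.
    """
    shared = yesterday.keys() & today.keys()
    if not shared:
        return 0.0
    return float(mean(abs(today[k] - yesterday[k]) for k in shared))


# Made-up positions for three tracked keywords on two consecutive days.
before = {"coffee shop dublin": 3, "hairdresser wicklow": 7, "seo agency": 12}
quiet_day = {"coffee shop dublin": 3, "hairdresser wicklow": 6, "seo agency": 12}
google_dance = {"coffee shop dublin": 15, "hairdresser wicklow": 1, "seo agency": 30}

print(f"quiet day: {volatility_score(before, quiet_day):.2f}")        # quiet day: 0.33
print(f"Google Dance: {volatility_score(before, google_dance):.2f}")  # Google Dance: 12.00
```

A single keyword moving one place barely registers, while a day when every tracked keyword jumps or drops by double digits pushes the score up sharply, which is the kind of spike the tools were showing on the 9th.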

Anyway, I digress. We haven’t even got started on this month’s SEO lowdown and I'm already running away with myself!

This month we'll be exploring the ‘Groundhog’ update in greater detail, delving deeper into the initial suspicions along with some industry influencers' take on this update. We will then look at an interesting trend within the local SEO vertical: optimising your local business for the term ‘near me’. Finally, we are going to give our readers the old one-two on ‘crawlability’, an SEO term we speak about on a daily basis but a concept our clients can sometimes find difficult to grasp due to its sheer ‘nerdiness’. So, as always, pop the kettle on, grab the biggest mug in the kitchen, throw on some headphones and get lost in this month’s mega SEO digest!

February SERP Volatility

February is generally a short, uneventful month as we get back into the swing of things post-Christmas, but it looks like the guys in Google never stop slogging away to improve the quality of their results pages. We know this because of the significant volatility we've seen in the SERPs between the 7th and the 9th of February.

In his early calls on the update, Bill Slawski, an SEO legend who makes it his business to keep on top of not only algorithm updates but also every Google patent application, coined it the ‘Groundhog’ update (due to the time of year). Groundhog is related to link quality. Now, before you say Penguin, hold your horses: Penguin focuses on webspam. According to Bill, ‘Groundhog’ looks at the traffic that links actually send and at dwell time after the visit. If you head over to Bill’s in-depth article on his website Go Fish, he explains that a Google patent filed way back on December 31, 2012 had just been granted on the 31st of January 2017. The reason for this association was simply the close timing between the algorithm update and the patent being granted.

Google Patent:

Determining a quality measure for a resource

Inventors: Hyung-Jin Kim, Paul Haahr, Kien Ng, Chung Tin Kwok, Moustafa A. Hammad, and Sushrut Karanjkar

Assignee: Google

United States Patent: 9,558,233

Granted: January 31, 2017

Filed: December 31, 2012

Abstract:

‘Methods, systems, and apparatus, including computer programs encoded on a computer storage medium, for determining a measure of quality for a resource. In one aspect, a method includes determining a seed score for each seed resource in a set. The seed score for a seed resource can be based on a number of resources that include a link to the seed resource and a number of selections of the links. A set of source resources is identified. A source score is determined for each source resource. The source score for a source resource is based on the seed score for each seed resource linked to by the source resource. Source-referenced resources are identified. A resource score is determined for each source-referenced resource. The resource score for a source-referenced resource can be based on the source score for each source resource that includes a link to the source-referenced resource.’
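To make the abstract's three-step chain (seed score, then source score, then resource score) easier to follow, here is a rough Python sketch. The patent only says each score "can be based on" the listed inputs, so the formulas below are assumptions for illustration: a seed's score is taken as link clicks per linking page, a source's score as the sum of the seed scores it links to, and a resource's score as the sum of the source scores pointing at it. The link graph, click counts and function names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical link graph: page -> pages it links to.
LINKS = {
    "blog-a": ["seed-1", "article-x"],
    "blog-b": ["seed-1", "seed-2", "article-y"],
    "spam-c": ["article-y", "article-z"],
}
# Hypothetical user selections (clicks) on links pointing at each seed page.
SEED_CLICKS = {"seed-1": 40, "seed-2": 5}


def seed_scores(links, seed_clicks):
    """Assumed formula: clicks on links to a seed divided by pages linking to it."""
    in_links = defaultdict(int)
    for targets in links.values():
        for target in targets:
            in_links[target] += 1
    return {s: clicks / in_links[s] for s, clicks in seed_clicks.items() if in_links[s]}


def source_scores(links, seeds):
    """Assumed formula: a source's score is the sum of the seed scores it links to."""
    return {
        page: sum(seeds[t] for t in targets if t in seeds)
        for page, targets in links.items()
        if any(t in seeds for t in targets)
    }


def resource_scores(links, sources, seeds):
    """Assumed formula: sum of the source scores of every source linking to a resource."""
    scores = defaultdict(float)
    for page, source_score in sources.items():
        for target in links[page]:
            if target not in seeds:  # score the source-referenced (non-seed) resources
                scores[target] += source_score
    return dict(scores)


seeds = seed_scores(LINKS, SEED_CLICKS)        # {'seed-1': 20.0, 'seed-2': 5.0}
sources = source_scores(LINKS, seeds)          # {'blog-a': 20.0, 'blog-b': 25.0}
print(resource_scores(LINKS, sources, seeds))  # {'article-x': 20.0, 'article-y': 25.0}
```

Note how `article-z`, linked only from a page that links to no seed, gets no score at all in this sketch: under these assumptions, a link only passes value if the linking page itself points at resources that users genuinely click through to.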

All the chatter in the early days was around link quality signals, including noise from SEO gurus Barry Schwartz (aka ‘Rusty Brick’) and Glenn Gabe. Barry discussed it over at Search Engine Roundtable within 24 hours of the initial rumblings and inferred that it was a link-related update.

After a few days of data crunchers examining their Google Analytics accounts, some with access to numerous clients, SEO advocates emerged in an attempt to explain why certain websites were winners and others losers (as always with an algorithm update). Glenn Gabe released a whopper of an article on his website, in which he agrees with us here at Wolfgang that winter 2016/2017 has been one of the most volatile seasons in the Google stratosphere since the dawn of the big G.

Glenn, an expert at analysing Google updates and an authority on the subject whom we really respect, took a deep dive into some of his client accounts. We’ll show you the impact some of his clients experienced, both positive and negative.

Positive Visibility Movement:

Negative Visibility Movement:

Not only did he notice big shifts in the search visibility of some of his clients, but he also reported that their rankings danced around like crazy. Some sites were privileged to see ranking jumps of up to 20 places, while less fortunate websites suffered declines of a similar size.

One of the main topics Glenn explores is “quality user engagement”, an area Google are very interested in. It's basically the experience a user has when they land on your website. The SEO team at Wolfgang believe in this too, putting a strong emphasis on the technical elements of a website to ensure SEO success. Elements Google treat as a negative user experience include: deception for monetisation purposes, aggressive ad placement, broken UX elements and aggressive interstitials.

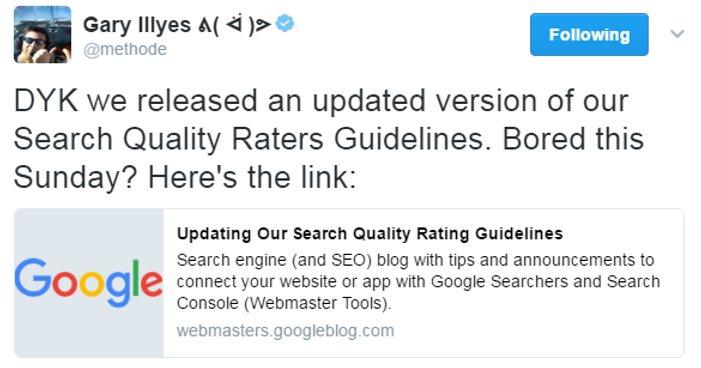

Glenn identified that, around the time of all this SERP volatility, Gary Illyes of Google had tweeted a DYK (Did You Know) about Google’s Quality Rater Guidelines, informing the SEO world that they had been updated. The significance was not the tweet itself but the fact that the guidelines had been updated back in March 2016, which led to Glenn’s natural suspicion that they were related to the update. More interesting still, when Glenn dug deeper into his clients' ranking changes, he found that the keywords that dropped were associated with pages that lacked relevance to those keywords. He also noted that the results that leapfrogged them appeared to be more relevant, pointing to a Panda update.

‘The relevancy of those search results had greatly increased. Actually, there were times I couldn’t believe the sites in question were ranking for those queries at one time (but they were prior to 2/7). Sure, the content was tangentially related to the query, but did not address the subject matter or questions directly.’

However, Panda did not act alone. It appears that other quality refreshes were made to the algorithm, bringing Glenn to a new level of suspicion and reintroducing us all to the ghostly update known as ‘Phantom’. Daniel Furch explores this latest explanation over at Searchmetrics and also looks at some of the historical Phantom winners and losers, with some great graphs and screenshots of search visibility.

For the fifth time in Google history, the Phantom has appeared! Phantom was first rolled out in May 2013 and was updated in 2015 and 2016, with this ‘Phantom V’ the first of 2017. Phantom is described in the industry as being like Panda, but different. It homes in on content signals such as dwell time, usability and user experience in an attempt to satisfy the user intent behind searches, thus empowering Google to strengthen their SERPs. Worried? Don't call Ghosthunters just yet. Our advice is to run a technical and content audit on your website, fix UX issues and update the content on your pages to answer the questions users are asking. Put the time and effort into your content creation so that the signals sent back to Google are positive. Do this and you can’t go wrong.

Optimise For 'Near Me' Searches

Since the early days of the internet, ‘best’ has been the golden word that websites optimise for in the search engines. Searchers would type in ‘best coffee shop’ or ‘best hairdresser’ and whoever was optimised for this was the winner.

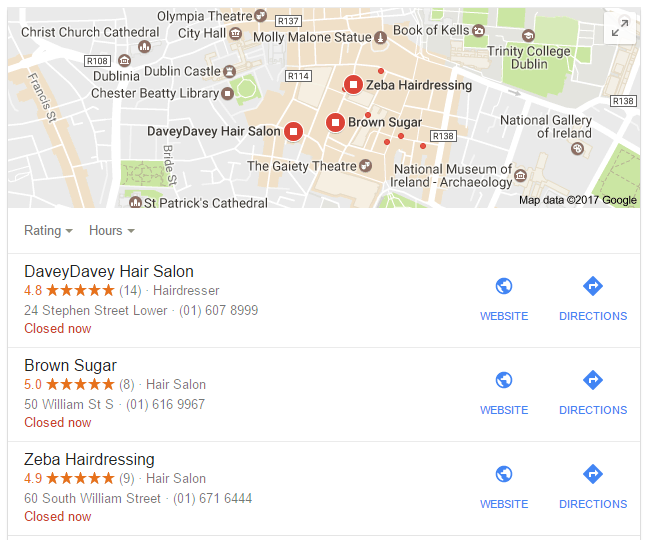

Let’s put this to the test. I am currently in Blessington, Co. Wicklow and when I search ‘best hairdresser’ in Google, the local pack result I get is as follows:

Right here, right now, this is no good to me, I am nowhere near Stephen St. or William St. in Dublin city centre.

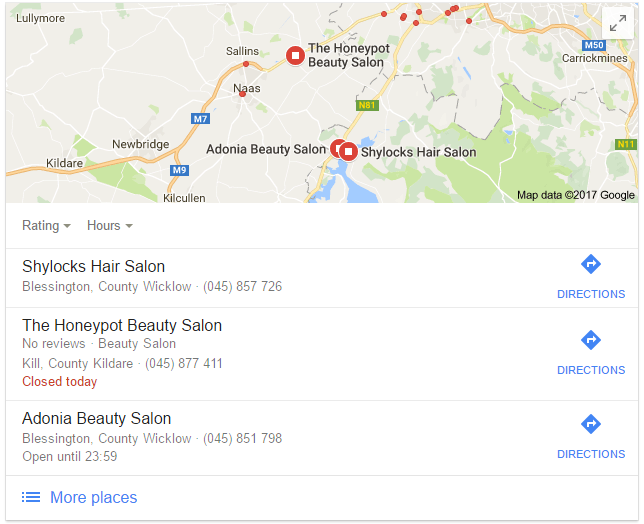

Now when I type, ‘hairdresser near me’ into Google, this is the result:

Closer, and definitely more useful to me. Thank you, Google, for giving me what I want so quickly! As Matt Cutts says in the video below (2:00 minutes in), Google strive to give the best and most useful results they possibly can to you, their customers. This is a great insight for any business that depends on local SEO.

Let’s dive into this a little deeper. It’s all to do with local intent and the general increase in smartphone ownership and usage. Surfing the web has progressed from getting home in the evening, whirling up the old faithful modem and staring at a laptop or desktop screen. You only need to hop on a bus, train or the Luas and everywhere you look people have their faces glued to their mobile phones (phone being an inaccurate name for the device; ‘mobile Google’ is more like it). This phenomenon, which has exploded over the past three years, has forced Google to change direction in a massive way. With their recent ‘Mobile First’ indexing approach and algorithm updates, websites and SEOs need to be thinking mobile first, desktop second. Check out this white paper from Tryzens on the many important factors in ensuring your website is mobile-friendly.

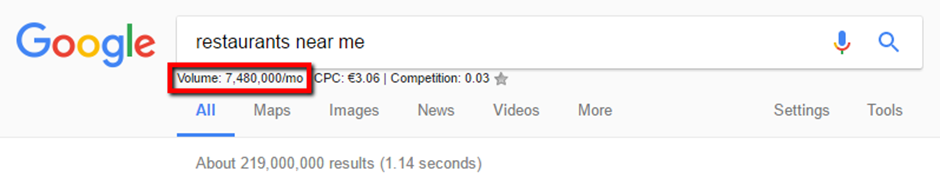

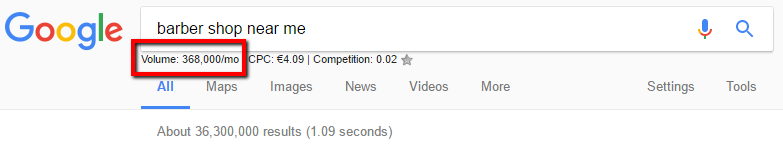

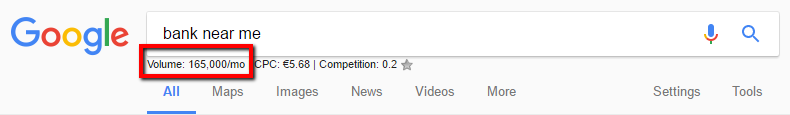

Dan Leibson at Local SEO Guide gives an excellent insight into a wide range of trends in the ‘near me’ vertical. Check it out and take our advice: get on the ‘near me’ gravy train in 2017 when optimising your local business. Here are some examples of the sheer volume of searches that ‘near me’ terms are getting in February 2017.

Crawlability Explained

At Wolfgang Digital, prospective SEO clients often ask us: can you get us ranking on Page 1 of Google? Why are our competitors there when we aren’t? They have the same content on their pages as we do, but they seem to get all the traffic.

Our first port of call is to examine some techy elements of the website to see if the search engines can crawl the site easily. We call this examining a website's crawlability.

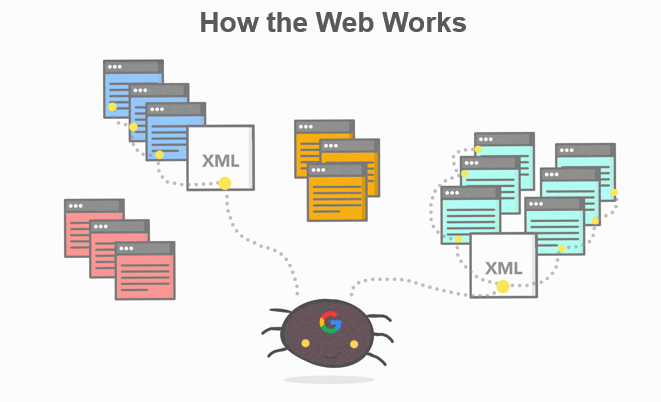

The next question website owners ask is: what is crawlability? Basically, crawlability is the ability of search engine robots to access and index all the pages on a website. Simply having a fancy-looking website is not enough to rank in Google; the search engine requires a technically sound, easy-to-index website before it can present your website on the much-coveted Page 1.

As Gareth explained a few weeks ago to a packed house at the 3XE 'Unbamboozling SEO' event, search engine bots spend every minute of the day travelling the internet, following links from one website to another. When they hit your website, you need to have the correct code in place to welcome them. They then move through your website and save HTML versions of your pages on their mammoth servers (the index). Every time the bots drop into your website, the index is updated, and this is the version of your page that is presented in the SERPs.
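The crawl-and-store loop Gareth describes can be sketched as a simple breadth-first crawler. This Python example runs over a hypothetical, in-memory website rather than the live web (the `SITE` dictionary and its URLs are made up), and it leaves out everything a real search engine does beyond following links and saving each page's HTML.

```python
from collections import deque

# Hypothetical in-memory website: URL -> (HTML snapshot, outgoing internal links).
SITE = {
    "/": ("<h1>Home</h1>", ["/services", "/blog"]),
    "/services": ("<h1>Services</h1>", ["/"]),
    "/blog": ("<h1>Blog</h1>", ["/blog/post-1"]),
    "/blog/post-1": ("<h1>Post 1</h1>", ["/"]),
    "/orphan": ("<h1>Orphan</h1>", []),  # no page links here
}


def crawl(start: str) -> dict[str, str]:
    """Breadth-first crawl: follow links from `start`, saving each page's HTML."""
    index: dict[str, str] = {}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        if url in index or url not in SITE:
            continue  # already saved, or a link to a page that doesn't exist
        html, links = SITE[url]
        index[url] = html  # the stored HTML version of the page
        queue.extend(links)
    return index


index = crawl("/")
print(sorted(index))  # ['/', '/blog', '/blog/post-1', '/services']
```

The `/orphan` page never makes it into the index because nothing links to it, which is why internal linking matters so much for crawlability.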

As we don’t have a video of Gareth explaining how search engines work, here’s the next best explanation we could find on Google: Mr Matt Cutts himself!

Put simply, a website's crawlability is its accessibility to search bots.

There are a number of ways a website can inadvertently block search engines, either blocking the full website, or just some pages.

A quick overview of potential blockers:

- robots.txt file

Code:

    User-agent: *
    Disallow: /

Impact: The above code instructs all search engine crawlers not to crawl any page on the website, so none of its content can be read and indexed.
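You can check how a compliant crawler would read these two lines with Python's standard-library `urllib.robotparser`. The `example.com` URLs below are placeholders, and Googlebot is simply used as an example user agent that falls under the `*` group.

```python
from urllib.robotparser import RobotFileParser

# The two robots.txt lines quoted above.
blocking_rules = ["User-agent: *", "Disallow: /"]

parser = RobotFileParser()
parser.parse(blocking_rules)

# Googlebot has no group of its own here, so the "*" group applies to it.
for path in ["/", "/services", "/blog/post-1"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(path, "allowed" if allowed else "blocked")  # every path prints "blocked"

# For comparison: an empty Disallow value means nothing is blocked.
open_parser = RobotFileParser()
open_parser.parse(["User-agent: *", "Disallow:"])
print(open_parser.can_fetch("Googlebot", "https://www.example.com/services"))  # True
```

The single `/` is the whole difference between a site that is closed to crawlers and one that is fully open, which is why it is one of the first things we check.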

- Robots Meta Tag

Code:

Impact: The search engine still has to access the page to read the tag, but it will not index it, instead moving on to the next available page.
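The example tag from the original post did not survive, so this Python sketch assumes a typical `<meta name="robots" content="noindex, nofollow">` tag. It uses the standard-library `html.parser` to pull the robots directives out of a page's HTML, a quick way to spot pages that are accidentally telling search engines to stay away. The `RobotsMetaFinder` class and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Hypothetical helper: collects directives from <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


# Assumed example page: the exact tag from the original post was lost.
page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body>Hi</body></html>'

finder = RobotsMetaFinder()
finder.feed(page)
print(sorted(finder.directives))       # ['nofollow', 'noindex']
print("noindex" in finder.directives)  # True
```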

- Nofollow links

Code:

Impact: A nofollow link in the internal architecture of your site tells search engines not to follow the link, negatively impacting the ability to discover deeper pages.
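Again, the original example code is missing, so the markup below is an assumed sample of internal navigation links. This Python sketch, built on the standard-library `html.parser`, separates links that search engines will follow from those carrying `rel="nofollow"`, making it easy to spot internal pages that are only reachable through nofollowed links. The `NofollowLinkFinder` class is hypothetical.

```python
from html.parser import HTMLParser


class NofollowLinkFinder(HTMLParser):
    """Hypothetical helper: splits <a href> links into followed and nofollow lists."""

    def __init__(self):
        super().__init__()
        self.followed: list[str] = []
        self.nofollow: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "noopener nofollow".
        rel_values = (attrs.get("rel") or "").lower().split()
        (self.nofollow if "nofollow" in rel_values else self.followed).append(href)


# Assumed navigation markup; the original post's example code was lost.
nav = """
<a href="/services">Services</a>
<a href="/blog" rel="nofollow">Blog</a>
<a href="/contact" rel="noopener nofollow">Contact</a>
"""

finder = NofollowLinkFinder()
finder.feed(nav)
print("followed:", finder.followed)  # followed: ['/services']
print("nofollow:", finder.nofollow)  # nofollow: ['/blog', '/contact']
```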

- Broken links

Code: None

Impact: Each URL the search bot crawls (or attempts to crawl), including broken links that only return an error, uses up crawl budget. There is a limit to a website's crawl budget, so it needs to be optimised.
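Here is a toy model of why broken links eat into crawl budget. The Python sketch assumes a hypothetical site and treats the budget as a fixed number of requests, each costing one unit whether the page returns a 200 or a 404. Google does not fully publish how it allocates crawl budget, so the numbers are illustrative only.

```python
from collections import deque

# Hypothetical site: URL -> (HTTP status, outgoing links).
SITE = {
    "/": (200, ["/old-offer", "/products", "/deleted-page"]),
    "/old-offer": (404, []),
    "/deleted-page": (404, []),
    "/products": (200, ["/products/widget", "/products/gadget"]),
    "/products/widget": (200, []),
    "/products/gadget": (200, []),
}


def crawl_with_budget(start: str, budget: int) -> tuple[list[str], list[str]]:
    """Fetch pages breadth-first until the budget runs out.

    Every request, including one that returns a 404, costs one unit.
    Returns (indexed_pages, wasted_requests).
    """
    indexed, wasted, seen = [], [], {start}
    queue = deque([start])
    while queue and budget > 0:
        url = queue.popleft()
        budget -= 1
        status, links = SITE.get(url, (404, []))
        if status != 200:
            wasted.append(url)  # budget spent, nothing gained
            continue
        indexed.append(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return indexed, wasted


indexed, wasted = crawl_with_budget("/", budget=5)
print("indexed:", indexed)                 # indexed: ['/', '/products', '/products/widget']
print("wasted on broken links:", wasted)   # wasted on broken links: ['/old-offer', '/deleted-page']
```

With a budget of five requests, two are wasted on the 404s and `/products/gadget` is never reached at all. Fixing or removing those two broken links would let the same budget cover every real page.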

- Spider Traps (Check out Luke’s Spider Trap blog here)

Code: None

Impact: Never-ending loops of URLs, leading to an exhausted crawl budget and whole sections of a website never being indexed.
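One classic spider trap is a relative link such as `href="blog/"` on the `/blog/` page, which resolves to `/blog/blog/`, then `/blog/blog/blog/`, and so on forever. The Python sketch below simulates that hypothetical site and shows one simple defence: skipping URLs whose path repeats the same segment too often or grows too long. The thresholds and helper names are our own assumptions for illustration, not a rule any search engine documents.

```python
from collections import Counter, deque
from urllib.parse import urljoin, urlsplit

MAX_SEGMENT_REPEATS = 2  # assumed threshold: still allows paths like /2017/02/02/
MAX_URL_LENGTH = 200     # assumed threshold


def looks_like_spider_trap(url: str) -> bool:
    """Heuristic: flag URLs that repeat a path segment too often or grow very long."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    most_common = Counter(segments).most_common(1)
    if most_common and most_common[0][1] > MAX_SEGMENT_REPEATS:
        return True
    return len(url) > MAX_URL_LENGTH


def links_on(url: str) -> list[str]:
    """Hypothetical site: the blog template contains a buggy relative link href="blog/"."""
    path = urlsplit(url).path
    if path == "/":
        return [urljoin(url, "/blog/")]
    if path.endswith("/blog/"):
        return [urljoin(url, "blog/")]  # resolves one level deeper every time
    return []


def crawl(start: str, max_pages: int = 50, trap_check: bool = True) -> list[str]:
    """Breadth-first crawl that optionally skips URLs flagged as spider traps."""
    seen, queue, crawled = {start}, deque([start]), []
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        crawled.append(url)
        for link in links_on(url):
            if link in seen or (trap_check and looks_like_spider_trap(link)):
                continue
            seen.add(link)
            queue.append(link)
    return crawled


print("without trap check:", len(crawl("https://example.com/", trap_check=False)), "pages")
print("with trap check:", len(crawl("https://example.com/")), "pages")
```

Without the check, the crawler spends its entire 50-page allowance walking deeper into the trap; with it, the crawl stops after three pages. The better fix, of course, is correcting the broken link in the template.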

These are the top issues that can negatively impact your website's crawlability. We would advise you to check for them regularly, but don’t go crazy fiddling with the code, as you may do more harm than good. Contact Wolfgang Digital and we will conduct one of Ireland’s most thorough technical SEO audits and get your site ‘revving’ like a 747!

Until next month, wrap up warm, keep your chin up and make sure you are ticking as many as possible of the boxes the search engines use to rank your website. We'll have more info in March to keep you up to speed with our good friend Google.

Stay vigilant and don’t fall victim to a Penguin, Panda or Phantom this spring. If you have noticed a sudden drop in traffic this February, get in touch with us as soon as possible and our SEO team will help with any animal wrangling or ghost hunting that needs doing.